Cross media research: Multichannel measurement

This article shares some of Millward Brown's experiences in evaluating integrated communications. Its cross-media studies usually include three to four media with a digital component. A range of solutions is needed to fit a client's needs, both from within existing brand tracking programmes and via tailored studies. However, the same best practice is followed in all cases. A clean baseline read of people's awareness and attitudes in the absence of any campaign exposure is essential. It is also vital to account for a medium's audience predisposition to the brand, to split apart the value of the medium's natural audience profile from the power of the client's communications.

Millward Brown

Investment in cross-media research pays dividends in ensuring the optimum use of channels in maximising a campaign's ROI

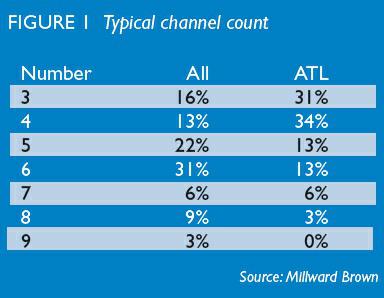

This article shares some experiences from evaluating integrated communications and how that helps us add real value in terms of actionable insight to clients. By virtue of our client base, the campaigns we are commissioned to evaluate are larger scale and multimedia. Globally, all the cross-media studies we conduct generally include three to four media with a digital component in nearly all cases. Looking beyond bought advertising media, we usually pull apart five to six channels that include direct, ‘owned’ and ‘earned’ media (Figure 1).

Including digital so often gives us a vital integrated view of its value. We conduct many studies best described as digital ‘deep dives’, driven partly by the industry's silo focus. Reviewing whether a specific activity adds something on top of the composite of everything else is important in attracting media budgets. Equally, optimisation within a channel requires this sort of focus. But this leaves clients unclear about how to plan digital in the overall mix. While cross-media evaluation approaches may not have the measurement detail of online behavioural streams, they do give a view of digital channels as part of an integrated approach to communications planning.

For our most advanced clients, integrated communications are not limited to multimedia campaigns. Their campaigns sit in a wider context of ongoing programmes around brand ideas/themes, content and social engagement. We are asked to evaluate the brand value of these ongoing programmes, the individual campaigns, plus the individual activities within those campaigns. It's quite a list, but with good sampling, a good survey instrument and multivariate modelling at the respondent level, it is possible.

There is a wide range of evaluation briefs to answer and a single solution is not right for all. It is vital to determine what the client really wants to look at. For example, is it proof of performance for one activity, for certain mixes, or a holistic view of the contribution of all campaign elements? What depth of optimisation are they looking for – simply a ‘yes/no’ to using that media again, or an optimisation of spend considering cost, reach, frequency, synergy and timing?

The three most common themes and responses are:

We are often asked simply to evaluate whether relatively ‘chunky’ media investments are doing anything noticeable in a campaign. We might do this simply by modelling general tracking study respondents who recognise different media, or are tagged as online exposed.

We are often asked to look at whether one medium or a specific mix – often with a smaller reach – is worth investing in and to what end. This is usually done with a tailored sample, cell-based study, where other media influences are controlled for but not investigated in their own right.

We could be asked to evaluate and optimise multilayered communications across brands and their variants using lots of media with an ongoing thematic programme around fashion supported through digital social media. This involves a larger, digitally enhanced longitudinal study with lots of modelling, including path work to test word-of-mouth/social comment as both an out-take of communications and an influence on brand attitudes.

In practice, a range of solutions is needed to fit a client's needs, both from within existing brand tracking programmes and via tailored studies.

While one solution does not fit all, we do follow the same best practices in all circumstances, and this is important. A clean baseline read of people's awareness and attitudes in the absence of any campaign exposure is vital. Because most campaigns have a pretty high reach, the unexposed are a small and untypical group of people, who do not represent the base levels for the rest of the population. So, in practical terms, this means taking a pre-read.

It is also vital to understand and account for a medium's audience predisposition to the brand, to split apart the value of the medium's natural audience profile from the power of the client's communications running through it. Both are important aspects of the value of a medium, but without this being done, the profile of the audience – being perhaps more in favour of the brand in the first place – could be mistaken as a communication effect and mislead the advertiser.
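As a minimal sketch of the adjustment described above, the gap between a medium's audience and everyone else can be measured before the campaign (from the pre-read) and subtracted from the gap seen afterwards. This is a simple difference-in-differences calculation, not the respondent-level modelling the article refers to; the function name and all figures are hypothetical and for illustration only.

```python
def predisposition_adjusted_uplift(pre_audience, pre_non_audience,
                                   post_audience, post_non_audience):
    """Difference-in-differences on a brand metric (e.g. % favourable).

    pre_*  : metric from the pre-read, before any campaign exposure
    post_* : metric after the campaign
    'audience' is the medium's natural audience; 'non_audience' is everyone else.
    """
    # Gap a naive post-only comparison would report as the media effect
    naive_gap = post_audience - post_non_audience
    # Gap that already existed before the campaign (audience predisposition)
    predisposition = pre_audience - pre_non_audience
    # What remains is attributable to the communications, under these assumptions
    return naive_gap - predisposition


# Hypothetical figures: the audience was already 10 points ahead at the pre-read
uplift = predisposition_adjusted_uplift(
    pre_audience=40.0, pre_non_audience=30.0,
    post_audience=48.0, post_non_audience=32.0,
)
print(uplift)  # 6.0, versus a naive post-only gap of 16.0
```

In this invented example, a post-only comparison would credit the medium with 16 points, of which 10 reflect the audience's existing favourability towards the brand.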

Discounting other influences is vital to isolate true media effects; in very many cases, word-of-mouth has an effect on results, as do retail activity, media comment and economic factors.

An area of ongoing debate is how accurately any of us can determine exposure, which is the foundation of planning and buying. Claimed recognition can struggle to distinguish certain media accurately in highly integrated campaigns, whereas an Opportunities to See (OTS) based approach is closest to planning currencies and also helps us to look at synergies and frequency effects. We do use recognition when relevant, but our preference is to use cookies or tagging for online, and estimate OTS for other media through surveys, modelling and calibration to industry surveys for offline.

There are so many things that can be measured and it is interesting how many different things clients want us to look at. The challenge is to determine what should be measured, and for what purpose, rather than measure everything that can be measured and treat it all in the same way.

A useful categorisation separates measures of advertising engagement (out-takes) and measures of brand engagement (outcomes). The accountability task is to robustly quantify the contribution of spend/exposure to brand outcomes – relying on out-takes to help us understand why certain relationships are more successful than others, but not relying on them as the end-game.

The digital communications revolution has flooded the market with communications engagement metrics (out-takes) such as dwell-time, interaction and ‘friending’. These measures are easy to collect, move over time in interesting ways and deliver input to dashboards that give a sense of being in control. But they are only symptoms of the real and final brand outcome.

Common currencies for measuring across different media help us to break down planning and evaluation silos, and integrate wider media types. In brand engagement evaluation, common currencies have emerged and – especially in the case of Millward Brown's OTS-based approach – deliver insight on reach, frequency and overlap across paid, owned and earned media.
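To illustrate what a common currency makes possible, the sketch below computes reach, average frequency and pairwise overlap from respondent-level OTS estimates. The data layout (one dictionary of OTS counts per respondent), the media names and the figures are all assumptions made for the example, not a description of Millward Brown's actual system.

```python
# Hypothetical respondent-level OTS estimates: one dict of counts per respondent
respondents = [
    {"tv": 3, "online": 0, "outdoor": 1},
    {"tv": 0, "online": 2, "outdoor": 0},
    {"tv": 5, "online": 1, "outdoor": 0},
    {"tv": 0, "online": 0, "outdoor": 0},
]


def reach(data, medium):
    """Share of respondents with at least one OTS in the medium."""
    return sum(1 for r in data if r[medium] > 0) / len(data)


def average_frequency(data, medium):
    """Mean OTS among those reached by the medium (0.0 if nobody was reached)."""
    exposed = [r[medium] for r in data if r[medium] > 0]
    return sum(exposed) / len(exposed) if exposed else 0.0


def overlap(data, medium_a, medium_b):
    """Share of respondents with at least one OTS in both media."""
    both = sum(1 for r in data if r[medium_a] > 0 and r[medium_b] > 0)
    return both / len(data)


print(reach(respondents, "tv"))              # 0.5
print(average_frequency(respondents, "tv"))  # 4.0
print(overlap(respondents, "tv", "online"))  # 0.25
```

Because every medium is expressed in the same unit, the same three functions apply equally to paid, owned and earned channels.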

Evaluating campaigns properly is so hard that most effort goes into execution and far less into considering the value of the decisions it supports. A simple and very potent thing to do is to reflect on the media budget value of the advice given.

For a regular TV advertiser, we saw that their second waves of TV showed a quicker build to optimum frequency levels, reaching diminishing returns faster. Understanding this means this client can deploy TV at lighter weights for longer, thus covering more purchase intervals; the nominal return on $1 million media spend would therefore be $500k.

In another case, a powerful pre-tested ad showed fast response at lower frequencies than actually deployed in one market, needing a third fewer GRPs to reach optimum efficiency. The nominal value per $1m media spend here was $300k, but the real value was much higher, since this advice was applied with success to other countries.

In a few cases, we have seen perfectly good outdoor creative have no effect, simply because it was phased before any TV 'pre-cooking'. In fact, generally speaking, we see non-TV media benefit a lot from TV priming, which does have mix and scheduling implications. In this case, when one client put things right in their next campaign, the outdoor communications shone through. With a typical outdoor campaign costing roughly $900k, this is a valuable insight.

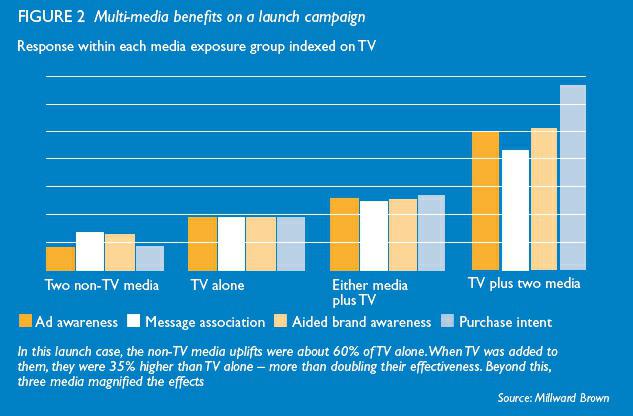

Particularly in launches – or other ‘tough’ tasks – we see the highest response among two to three overlapping media (we call this magnification). In one case, without altering budget splits by media, a very modest optimisation to maximise overlap was worth a minimum of $100,000 per $1m media budget – obviously too late for that campaign but vital learning for future launches (Figure 2).
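One simple way to look for the magnification pattern described above is to group respondents by the number of media they were exposed to and compare the average brand outcome in each group. The sketch below assumes respondent-level OTS counts and a 0/1 outcome such as purchase intent; the field names and data are hypothetical. It is a raw comparison only, and does not control for audience predisposition or other influences as a full model would.

```python
from collections import defaultdict


def response_by_overlap(respondents, media, outcome_key):
    """Mean outcome grouped by the number of media each respondent was exposed to."""
    totals = defaultdict(lambda: [0.0, 0])  # n_media -> [sum of outcome, respondents]
    for r in respondents:
        n_media = sum(1 for m in media if r[m] > 0)
        totals[n_media][0] += r[outcome_key]
        totals[n_media][1] += 1
    return {n: s / c for n, (s, c) in sorted(totals.items())}


# Hypothetical respondents: OTS counts per medium plus a 0/1 purchase-intent flag
sample = [
    {"tv": 1, "online": 0, "outdoor": 0, "intent": 0},
    {"tv": 1, "online": 1, "outdoor": 0, "intent": 1},
    {"tv": 0, "online": 0, "outdoor": 0, "intent": 0},
    {"tv": 2, "online": 1, "outdoor": 0, "intent": 0},
    {"tv": 1, "online": 1, "outdoor": 1, "intent": 1},
    {"tv": 0, "online": 3, "outdoor": 0, "intent": 1},
]

result = response_by_overlap(sample, ["tv", "online", "outdoor"], "intent")
print(result)  # {0: 0.0, 1: 0.5, 2: 0.5, 3: 1.0}
```

With real survey data, a rising response as the number of overlapping media increases would be consistent with magnification, subject to the controls discussed earlier in the article.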

CONCLUSION

Effectiveness evaluation has come a long way in recent years and keeping a firm eye on client needs and communications trends will help it to deliver valuable advice into the future. More clients will devote more research budget to good quality cross-media research. It pays back on a campaign-by-campaign basis and over the longer term.

We enormously undersell the value of the advice we give in this area, perhaps finding it too audacious to push the types of examples given in this article. But not doing this leaves us justifying our efforts on a cost-plus, commodity basis, when in fact our know-how and experience, along with the data, actively contribute to decisions that can drive future brand growth.
